SimpleVR

Virtual Reality Authoring Tool

A browser-based virtual reality authoring tool designed for non-technical users and youth to create immersive stories.

This work is currently in progress.

Team: 2 Developers (Ali Momeni, Luke Anderson), 1 Designer (Vikas Yadav/alum), 2 Coordinators (Aparna Wilder, Molly Bernsten)

My role: research, interface design (Spatial/Mobile/Web)

Duration: Ongoing (Jan 2017 - Now)

About SimpleVR: SimpleVR (formerly SocialVR Lab) started in 2015 at ArtFab in the School of Art at Carnegie Mellon University as a project of Ali Momeni. Currently, we are developing the project under IRL Labs to get seed funding and open source it.

The Challenge

While there are many technology-driven solutions in education that aim to make students better storytellers, very few of them support immersive storytelling while remaining accessible to students. With the increasing availability of smartphones and inexpensive virtual reality headsets, our challenge was to create a simple tool that would improve the storytelling skills of students from different grades.

How does SimpleVR work?

Users create immersive stories by adding text, images, and audio on top of a 360 panorama image, which together form a room. They can then connect multiple rooms by creating doors between them.

Storytellers can enhance their stories by guiding the reader through a room with a narrative, or by adding a soundtrack and gaze-activated sounds to make the story feel more unified through sound.
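To make the room-and-door structure concrete, here is a minimal sketch of how such a story could be modeled. This is an illustration only, not SimpleVR's actual schema: every type name, field name, and file name below (`Story`, `Room`, `Door`, `Hotspot`, `reachableRooms`, and so on) is an assumption made for the example.

```typescript
// Hypothetical data model for illustration; not SimpleVR's actual schema.
type HotspotKind = "text" | "image" | "audio";

interface Hotspot {
  kind: HotspotKind;
  yaw: number;     // horizontal position on the panorama, in degrees
  pitch: number;   // vertical position on the panorama, in degrees
  content: string; // text body, or a file name/URL for image and audio
}

interface Door {
  yaw: number;
  pitch: number;
  targetRoomId: string; // the room this door leads to
}

interface Room {
  id: string;
  panoramaUrl: string;   // the 360 background image
  hotspots: Hotspot[];
  doors: Door[];
  soundtrackUrl?: string; // optional background audio
}

interface Story {
  title: string;
  startRoomId: string;
  rooms: Room[];
}

// Returns the ids of all rooms a viewer can reach from the start room
// by walking through doors (breadth-first traversal).
function reachableRooms(story: Story): string[] {
  const byId = new Map<string, Room>();
  for (const room of story.rooms) byId.set(room.id, room);

  const seen = new Set<string>();
  const queue: string[] = [story.startRoomId];
  while (queue.length > 0) {
    const id = queue.shift() as string;
    const room = byId.get(id);
    if (room === undefined || seen.has(id)) continue;
    seen.add(id);
    for (const door of room.doors) queue.push(door.targetRoomId);
  }
  return Array.from(seen);
}

// Example story: two connected rooms and one room with no door leading to it.
const demoStory: Story = {
  title: "My School",
  startRoomId: "lobby",
  rooms: [
    {
      id: "lobby",
      panoramaUrl: "lobby.jpg",
      hotspots: [{ kind: "text", yaw: 10, pitch: 0, content: "Welcome!" }],
      doors: [{ yaw: 90, pitch: 0, targetRoomId: "garden" }],
    },
    {
      id: "garden",
      panoramaUrl: "garden.jpg",
      hotspots: [{ kind: "audio", yaw: -45, pitch: 5, content: "birds.mp3" }],
      doors: [{ yaw: 180, pitch: 0, targetRoomId: "lobby" }],
      soundtrackUrl: "ambient.mp3",
    },
    { id: "attic", panoramaUrl: "attic.jpg", hotspots: [], doors: [] },
  ],
};

const reachable = reachableRooms(demoStory); // ["lobby", "garden"]
```

A check like `reachableRooms` is one way an editor could warn a storyteller that a room (here, `attic`) has no door leading to it.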

JANUARY 2019 UPDATE

We've decided to open source this project and have renamed it from SocialVR Lab to SimpleVR. While we design and build the next iteration, feel free to create and view your immersive stories with our alpha version on both desktop and mobile.

The case study will be updated as soon as possible.

Ongoing Design Process

When we started to work on SimpleVR, it consisted of two separate experiences: a web browser experience for creating stories and a Unity-based mobile app for viewing them. Our first design exploration covered the interaction styles of the annotation hotspots in the mobile app.

Since the most important goal of SimpleVR is accessibility, we designed gaze-only interactions, which would not need any physical interface such as Google Cardboard's fuse button. With the help of our VR developer, we were able to view our reticle and hotspot designs on the device quickly and iterate on them.
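One common way to build gaze-only interaction is a dwell timer: a hotspot activates once the reticle has rested on it for a set time. The sketch below illustrates that general technique under stated assumptions. It is not SimpleVR's implementation (the original viewer was a Unity app), and the `GazeDwell` class, its method names, and the one-second threshold are all hypothetical.

```typescript
// Hypothetical dwell-based gaze selector; illustrative only.
class GazeDwell {
  private dwellMs: number;
  private targetId: string | null = null;
  private elapsedMs = 0;
  private fired = false;

  constructor(dwellMs: number) {
    this.dwellMs = dwellMs;
  }

  // Call once per frame with the id of the hotspot under the reticle
  // (or null if none) and the time since the previous frame.
  // Returns the hotspot id on the frame where the dwell completes, else null.
  update(gazedId: string | null, deltaMs: number): string | null {
    if (gazedId !== this.targetId) {
      // Gaze moved to a different target (or away): restart the timer.
      this.targetId = gazedId;
      this.elapsedMs = 0;
      this.fired = false;
      return null;
    }
    if (gazedId === null || this.fired) return null;

    this.elapsedMs += deltaMs;
    if (this.elapsedMs >= this.dwellMs) {
      this.fired = true; // activate only once per continuous gaze
      return gazedId;
    }
    return null;
  }

  // Fill fraction for a reticle progress ring, from 0 to 1.
  progress(): number {
    if (this.targetId === null) return 0;
    return Math.min(1, this.elapsedMs / this.dwellMs);
  }
}

// Usage: a one-second dwell, driven by per-frame updates.
const gaze = new GazeDwell(1000);
gaze.update("door-1", 16);                  // gaze lands on the door: timer starts
gaze.update("door-1", 500);                 // halfway there, nothing activates yet
const halfway = gaze.progress();            // 0.5
const activated = gaze.update("door-1", 500); // "door-1"
```

Exposing `progress()` lets the reticle animate while the timer fills, so the viewer can see that an activation is coming and look away to cancel it.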

In parallel, we started defining a visual design system to create a uniform look across platforms and mediums.

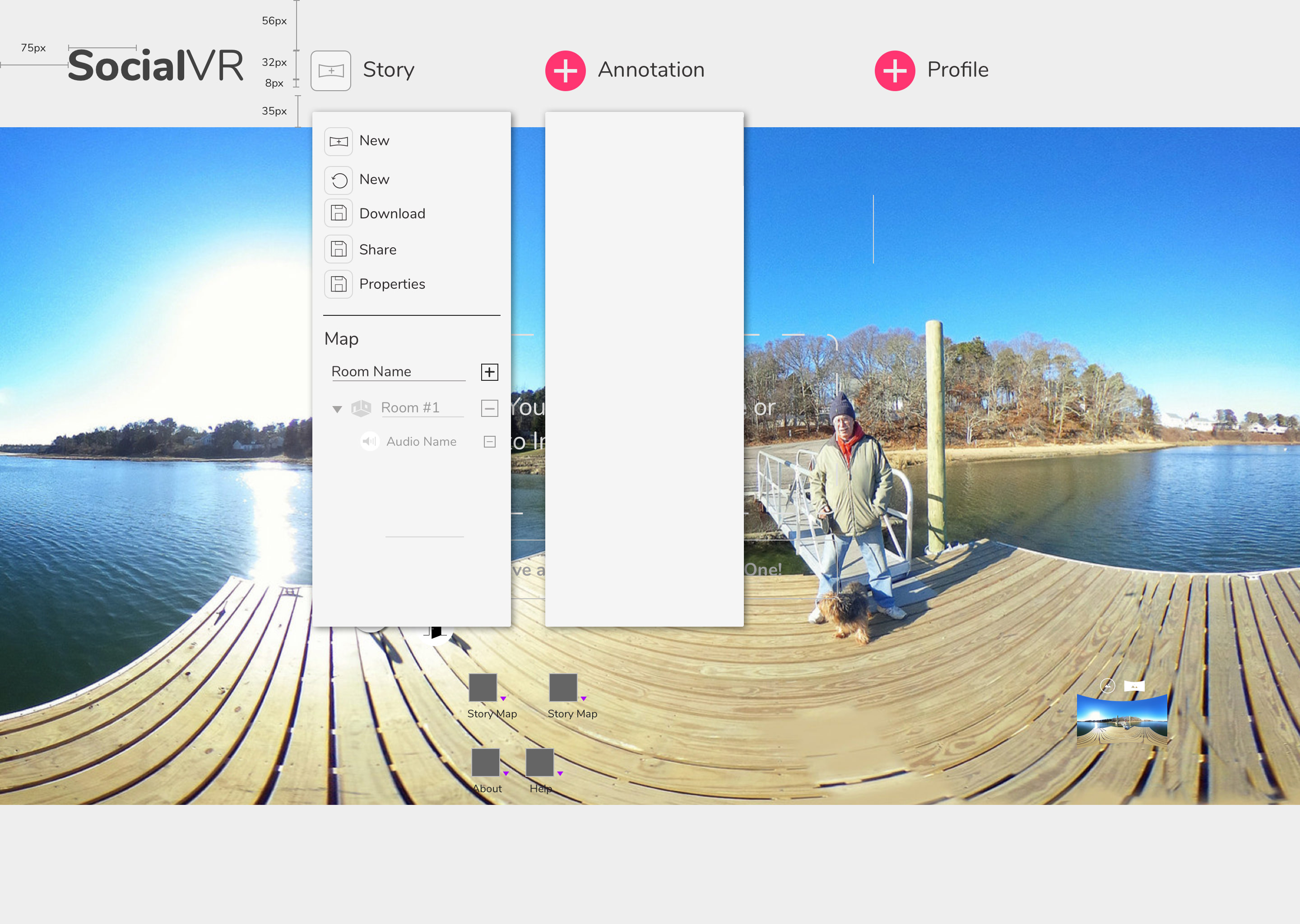

We explored different layouts for the editor. We researched best practices and used familiar patterns such as drag-and-drop interactions for backgrounds and bounding boxes for active hotspots.

Our early explorations for the editor layout included drop-down and slide-up layouts with fixed menus. We decided against a fixed menu bar because it would not work on smaller displays. To make it work, we would have had to shrink the working area and make it responsive.

We also proposed additional functions such as undo/redo, help pages, and sharing and downloading a created story.

Some of the functions we proposed were implemented and merged as we moved to a cloud-powered back end. Before the AWS integration, our users downloaded their stories as .zip files and transferred them to their devices to view them. Our later layout explorations also included slide-in/slide-out panels, which we iterated on in our latest proposal.

Our last iteration for the editor included a content drawer that lets users manage their uploaded assets across their stories. We went with multi-directional slide-in panels and assigned a different function to each direction.

The left panel is for managing stories, the right panel is for managing user details, and the bottom panel is for managing assets. We also used our logo as an escape button, through which users can learn about the project or ask for help.